Traditionally, phonological theory employs a discrete and limited number of units to describe how human speech is structured at the cognitive level. More specifically, it studies how a closed set of phonemes is distributed and organized into words within a language system. However, speech, as a physical process, involves various anatomical structures that produce sound, and this process is “continuous and context-dependent” (Goldstein & Fowler, 2003, p. 160), varying in many articulatory and aerodynamic parameters. It therefore seems problematic to use discrete phonological symbols to account for these physical processes, as a direct relationship between the two is lacking. Articulatory Phonology (henceforth AP) offers a fundamentally different theory that accounts for speech at both the cognitive and the physical levels. This theoretical framework has been developed by Catherine Browman and Louis Goldstein since the 1980s (Browman & Goldstein, 1986, 1990a, 1990b, 1992, 1995). This entry provides a brief and simplified overview of the three core components of AP. Readers are strongly encouraged to refer to the original articles and book chapters listed in the references to gain a comprehensive understanding of AP.

Within this framework, an atomic unit – the gesture – is employed to capture both the phonological and physical properties of speech. Each articulatory gesture functions as both a unit of information and a discrete action of constriction involving one of the vocal tract's constricting organs, such as the lips, tongue tip, tongue body, velum, or glottis.

As a unit of information, a gesture can distinguish between utterances through its presence, its constriction location, and/or its constriction degree. As an action unit, each gesture entails the formation and release of a constriction within the vocal tract. Gestures are characterized as dynamical systems with specific temporal and spatial properties, which can be defined through task dynamics (Saltzman, 1986; Saltzman & Kelso, 1987; Saltzman & Munhall, 1989). In the task dynamic model, a gesture also refers to the articulators – such as the lips and tongue tip – or the tract variables involved in forming a constriction in the vocal tract.

Eight tract variables are identified: LP (lip protrusion) and LA (lip aperture) for the LIP gesture; TTCL (tongue tip constriction location) and TTCD (tongue tip constriction degree) for the TT (tongue tip) gesture; TBCL (tongue body constriction location) and TBCD (tongue body constriction degree) for the TB (tongue body) gesture; VEL (velic aperture) for the Velum gesture; and GLO (glottal aperture) for the Glottis gesture. These tract variables specify the constriction goals in terms of location and degree for oral gestures, and the degree of opening for the Velum and Glottis gestures.
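The inventory above can be written down as a small lookup table. The following Python sketch is purely illustrative: the class name `TractVariable`, the constant `TRACT_VARIABLES`, and the helper `variables_for` are hypothetical names introduced here, not part of any AP software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TractVariable:
    abbreviation: str
    description: str
    gesture: str  # the gesture (constricting organ) the variable belongs to


# The eight tract variables listed in the text, grouped by gesture.
TRACT_VARIABLES = (
    TractVariable("LP", "lip protrusion", "LIP"),
    TractVariable("LA", "lip aperture", "LIP"),
    TractVariable("TTCL", "tongue tip constriction location", "TT"),
    TractVariable("TTCD", "tongue tip constriction degree", "TT"),
    TractVariable("TBCL", "tongue body constriction location", "TB"),
    TractVariable("TBCD", "tongue body constriction degree", "TB"),
    TractVariable("VEL", "velic aperture", "Velum"),
    TractVariable("GLO", "glottal aperture", "Glottis"),
)


def variables_for(gesture):
    """Return the abbreviations of the tract variables of one gesture."""
    return [tv.abbreviation for tv in TRACT_VARIABLES if tv.gesture == gesture]


print(variables_for("TT"))
```

Oral gestures (LIP, TT, TB) each carry two tract variables, whereas the Velum and Glottis gestures carry a single aperture variable.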

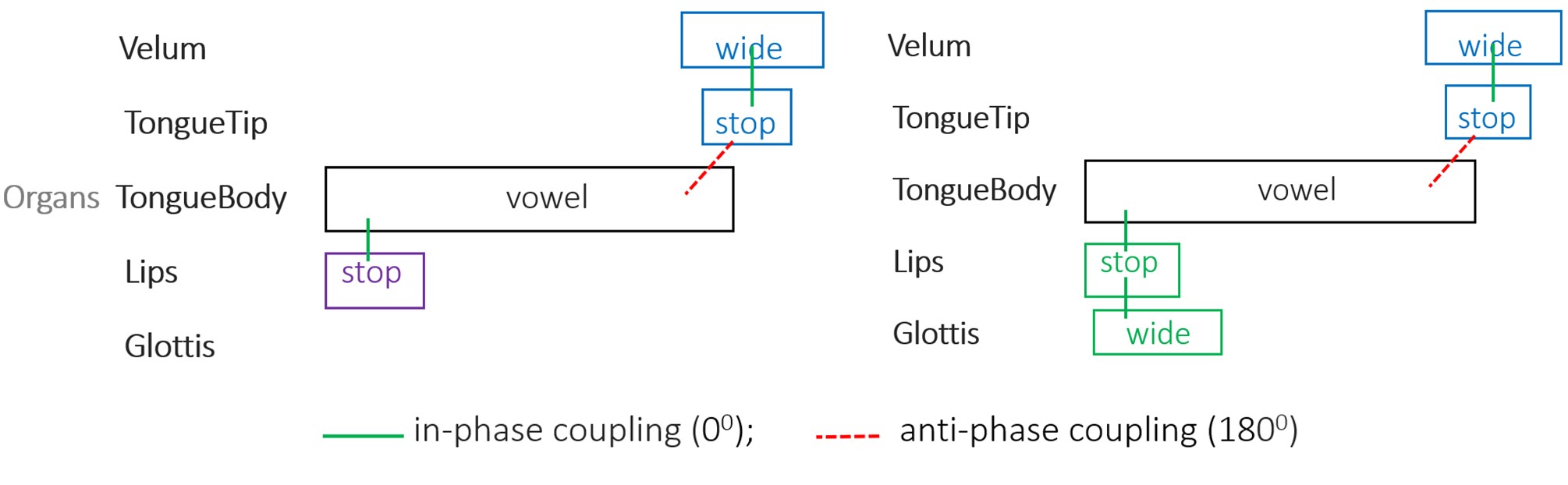

In AP, the smallest phonological unit is the gesture, and vowels and consonants are formed from gestures. Certain vowels and consonants are represented by a combination of multiple gestures; for example, the consonant [pʰ] corresponds to a combination of a LIP gesture and a Glottis gesture. A gestural score represents the coordinated timing and organization of these gestures over time. Examples of gestural scores can be seen in Figure 1.

Gao (2008) extended the concept of gesture to suprasegmental features, proposing that lexical tone may also be composed of a gesture or a combination of gestures. In her work, two invariant tone (T) gestures, aimed at High (H) and Low (L) pitch levels, are proposed within the framework of AP. The four lexical tones of Mandarin Chinese can be modeled using either a single T gesture or a combination of the two. The actual articulators for tone gestures should be physiological structures or parameters, such as the cricothyroid (CT muscle) angle, sterno-hyoid distance, or subglottal pressure (McGowan & Saltzman, 1995). Because their precise coordination during frequency modulation is not yet well understood, fundamental frequency is treated as both the articulator and the goal in the model.

Another central claim within AP is that gestures are dynamic events, which are coordinated pairwise through coupling relations (Browman & Goldstein, 1990b, 1992). The most stable, and thus most common, coupling relation is the in-phase (or 0°) mode, in which the two gestures are activated synchronously. The anti-phase (or 180°) coupling mode is considered the second most stable, where two gestures are coordinated sequentially. However, under certain conditions, other coupling modes are also possible.
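The idea that a pair of gestures settles into a target coupling mode can be illustrated with a toy relative-phase equation. The sketch below assumes a simple first-order dynamic, dψ/dt = −a·sin(ψ − ψ₀), where ψ is the relative phase of the two gestures and ψ₀ is the target mode (0° or 180°). This equation, the function name `settle_relative_phase`, and all parameter values are illustrative assumptions rather than the formulation used in the cited work, and the sketch does not model the difference in stability between the two modes.

```python
import math


def settle_relative_phase(start_deg, target_deg, strength=1.0, dt=0.01, steps=5000):
    """Euler-integrate d(psi)/dt = -strength * sin(psi - target).

    psi is the relative phase between two gestures; the target phase acts
    as an attractor (assumed toy dynamics, not the published model).
    """
    psi = math.radians(start_deg)
    target = math.radians(target_deg)
    for _ in range(steps):
        psi += -strength * math.sin(psi - target) * dt
    return math.degrees(psi)


# A pair that starts 40 degrees apart is pulled to the in-phase mode.
in_phase = settle_relative_phase(start_deg=40.0, target_deg=0.0)
# A pair that starts 120 degrees apart is pulled to the anti-phase mode.
anti_phase = settle_relative_phase(start_deg=120.0, target_deg=180.0)

print(round(in_phase, 3), round(anti_phase, 3))
```

In both runs the relative phase converges to the specified target, which is the sense in which a coupling relation fixes the relative timing of two gestures.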

Additionally, according to the ‘C-V coupling hypothesis’ (Goldstein et al., 2006), syllable structure is viewed as emerging from the coupling modes between C(onsonant) and V(owel) gestures. The onset C gesture and the V gesture are considered in-phase coupled, whereas the coda C gesture and the V gesture are considered anti-phase coupled. For syllables with complex structures, such as CCVC, which involve onset consonant clusters, the coupling relations between gestures may compete, as suggested by the competitive coupling hypothesis (Browman & Goldstein, 2000).

The Gestural Score, a theoretical representation, has been utilized to illustrate the gestural composition of a given utterance. It visually displays the coordinated structure of gestures as a function of time, as conceptualized within AP. Each gesture is depicted as a box, with the (horizontal) placement of these boxes determined by the coordination relations among the gestures. Figure 1 (left) presents the gestural score and the intergestural phasing relations for the English word ban /ban/. The onset C gesture (LIP for /b/) and the V gesture (TB for /a/) are in-phase coupled at 0 degrees, as indicated by a solid line, meaning that these two gestures are activated at roughly the same time. The coda consonant (/n/) consists of two gestures, the TT gesture and the Velum gesture, which are in-phase coupled with each other. Intergesturally, however, only the TT gesture is coupled with the V gesture (TB), and these are in a sequential relation, being anti-phase coupled (at 180 degrees), represented by the dotted line. The gestural score for the English word pan /pan/ (Figure 1 right) is nearly identical to that of ban, with the primary difference being that the onset C gesture is represented by a combination of the Glottis gesture and the LIP gesture, which are in-phase coupled.
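The two gestural scores just described can also be encoded as small coupling graphs. The following Python sketch records only the gestures and coupling relations stated in the text; the dictionary layout and the names `BAN`, `PAN`, and `contrast` are hypothetical choices made for illustration.

```python
IN_PHASE = 0      # degrees: gestures activated synchronously
ANTI_PHASE = 180  # degrees: gestures activated sequentially

# Each score lists its gestures and the pairwise couplings
# as (gesture_a, gesture_b, relative phase in degrees).
BAN = {
    "gestures": {"LIP", "TB", "TT", "Velum"},
    "couplings": [
        ("LIP", "TB", IN_PHASE),    # onset /b/ with the vowel
        ("TB", "TT", ANTI_PHASE),   # vowel with the tongue tip gesture of coda /n/
        ("TT", "Velum", IN_PHASE),  # the two gestures that make up /n/
    ],
}

# "pan" adds a Glottis gesture coupled in-phase with the LIP gesture.
PAN = {
    "gestures": BAN["gestures"] | {"Glottis"},
    "couplings": BAN["couplings"] + [("Glottis", "LIP", IN_PHASE)],
}


def contrast(score_a, score_b):
    """Return the gestures present in exactly one of the two scores."""
    return score_a["gestures"] ^ score_b["gestures"]


print(contrast(BAN, PAN))
```

Comparing the two scores isolates the Glottis gesture as the only difference, which matches the description of pan as ban plus one additional, in-phase coupled gesture.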

Browman and Goldstein (1995) argue that gestural analysis can be applied to account for utterances in any language, addressing both within-language and cross-language differences. The following examples illustrate how variations in gestural constellations define phonological contrasts: 1) the presence or absence of a gesture, as exemplified in Figure 1; 2) the use of a different articulator or tract variable for a given gesture, such as the lips in pan versus the tongue tip in tan to achieve the syllable-initial consonantal closure; 3) different constriction parameter values for the same gesture, for example, the different degrees of tongue tip constriction (TTCD) in tip vs. sip; 4) different coupling relations among the same set of gestures, as seen in pan and nap.

The third key component of AP is dynamic specification. Each gesture is associated with a planning oscillator (or clock), which triggers the gesture's activation. Unlike the simple point attractor that governs individual gestures, the clock operates as a periodic attractor and functions as a dynamical system. The production of a gestural constriction can be modelled as a mass-spring dynamical system, with fixed parameters such as target, stiffness, and damping coefficients (Goldstein et al., 2006). The relative phasing of these clocks, and thus the timing of gesture activation, is controlled by coupling the clocks to one another.
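The mass-spring description of a single gesture can be made concrete with a short simulation. The sketch below integrates m·x″ = −k(x − target) − b·x′ for one tract variable. Critical damping (b = 2√(k·m)), unit mass, the parameter values, and the function name `simulate_gesture` are assumptions made for illustration; the text itself only names target, stiffness, and damping as the parameters.

```python
import math


def simulate_gesture(x0, target, stiffness, mass=1.0, dt=0.001, duration=1.0):
    """Simulate one tract variable as a damped mass-spring system.

    Integrates m*x'' = -k*(x - target) - b*x' with semi-implicit Euler steps.
    Critical damping is assumed here so the variable approaches the target
    without oscillating.
    """
    damping = 2.0 * math.sqrt(stiffness * mass)  # assumed critical damping
    x, v = x0, 0.0
    trajectory = [x]
    for _ in range(int(round(duration / dt))):
        a = (-stiffness * (x - target) - damping * v) / mass
        v += a * dt
        x += v * dt
        trajectory.append(x)
    return trajectory


# Hypothetical lip aperture closing from 10 (arbitrary units) to a target of 0.
traj = simulate_gesture(x0=10.0, target=0.0, stiffness=400.0)
print(round(traj[0], 3), round(traj[-1], 3))
```

The trajectory moves smoothly to the target and stays there, which is the behaviour of a point attractor; raising the stiffness makes the constriction form faster.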

On the basis of gestural analysis and task dynamics (Saltzman, 1986; Saltzman & Kelso, 1987; Saltzman & Munhall, 1989), attempts have been made to model speech production (Saltzman & Munhall, 1989; Browman & Goldstein, 1992; Nam & Saltzman, 2003). The resulting model has two levels: intergestural coordination and interarticulator coordination. The former, which can be considered the planning level, defines a set of gestures along with their activation intervals and relative timing through a coupling graph, and then sends motor commands to the interarticulator level. At the interarticulator level, the speech articulators, guided by the dynamic parameter specifications of the gesture set, coordinate to generate speech output.

Earlier work by Browman and Goldstein primarily applied AP to account for speech in American English. However, the framework has since been extended to various other languages, including French (Kühnert et al., 2006), Greek (Katsika, 2012), Italian (Hermes et al., 2012), Korean (Son et al., 2007), Lhasa Tibetan (Hu, 2012), Romanian (Marin & Pouplier, 2014), Russian (Zsiga, 2000), and Tashlhiyt Berber (Hermes et al., 2011). AP has also been adapted to prosodic domains, such as lexical tones in Mandarin (Gao, 2008) and pitch accents in German and Catalan (Mücke et al., 2012) and in American English (Iskarous et al., 2024). Additionally, AP has been applied to second language speech (Zsiga, 2003; Yanagawa, 2006) as well as to speech from children with speech disorders (Namasivayam et al., 2020; Hagedorn & Namasivayam, 2024).

Browman, C. P., & Goldstein, L. (1986). Towards an articulatory phonology. Phonology Yearbook, 3, 219–252.

Browman, C. P., & Goldstein, L. (1990a). Gestural specification using dynamically defined articulatory structures. Journal of Phonetics, 18, 299–320.

Browman, C. P., & Goldstein, L. (1990b). Tiers in Articulatory Phonology with some implications for casual speech. In J. Kingston & M. E. Beckman (Eds.), Papers in Laboratory Phonology I: Between the Grammar and Physics of Speech (pp. 341–376). Cambridge, UK: Cambridge University Press.

Browman, C. P., & Goldstein, L. (1992). Articulatory Phonology: An overview. Phonetica, 49, 155–180.

Browman, C. P., & Goldstein, L. (1995). Dynamics and Articulatory Phonology. In T. van Gelder & R. F. Port (Eds.), Mind as Motion (pp. 175–193). Cambridge, MA: MIT Press.

Browman, C. P., & Goldstein, L. (2000). Competing constraints on intergestural coordination and self-organization of phonological structures. Bulletin de la Communication Parlée, 5, 25–34.

Goldstein, L., & Fowler, C. A. (2003). Articulatory phonology: A phonology for public language use. In Phonetics and phonology in language comprehension and production: Differences and similarities (pp. 159–207). Berlin: Walter de Gruyter.

Goldstein, L. M., Byrd, D., & Saltzman, E. (2006). The role of vocal tract gestural action units in understanding the evolution of phonology. In M. Arbib (Ed.), From action to language: The mirror neuron system (pp. 215–249). Cambridge, UK: Cambridge University Press.

Saltzman, E. (1986). Task dynamic co-ordination of the speech articulators: A preliminary model. In H. Heuer & C. Fromm (Eds.), Generation and modulation of action patterns (pp. 129–144). Berlin: Springer-Verlag.

Saltzman, E., & Kelso, J. A. S. (1987). Skilled actions: A task dynamic approach. Psychological Review, 94(1), 84–106.

Saltzman, E., & Munhall, K. (1989). A dynamical approach to gestural patterning in speech production. Ecological Psychology, 1(4), 333–382.

Gao, M. (2008). Mandarin tones: An Articulatory Phonology account (PhD Thesis). Yale University, New Haven, CT.

Hagedorn, C., & Namasivayam, A. (2024). Articulatory Phonology and speech impairment. In M. Ball, F. Perkins, N. Müller, & S. Howard (Eds.), The Handbook of Clinical Linguistics (2nd ed., pp. 333–349). Hoboken, NJ: Wiley Blackwell.

Mücke, D., Nam, H., Hermes, A., & Goldstein, L. (2012). Coupling of tone and constriction gestures in pitch accents. In P. Hoole, L. Bombien, M. Pouplier, C. Mooshammer, & B. Kühnert (Eds.), Consonant clusters and structural complexity (pp. 205–230). Berlin/Boston: De Gruyter Mouton.

Hermes, A., Ridouane, R., Mücke, D., & Grice, M. (2011). Kinematics of syllable structure in Tashlhiyt Berber: The case of vocalic and consonantal nuclei. In Proceedings of the 9th International Seminar on Speech Production (pp. 1–6).

Hu, F. (2012). Tonogenesis in Lhasa Tibetan. In P. Hoole, L. Bombien, M. Pouplier, C. Mooshammer, & B. Kühnert (Eds.), Consonant clusters and structural complexity (pp. 231–256). Berlin/Boston: De Gruyter Mouton.

Iskarous, K., Cole, J., & Steffman, J. (2024). A minimal dynamical model of intonation: Tone contrast, alignment, and scaling of American English pitch accents as emergent properties. Journal of Phonetics, 104, 101309.

Katsika, A. (2012). Coordination of prosodic gestures at boundaries in Greek (PhD Thesis). Yale University, New Haven, CT.

Kühnert, B., Hoole, P., & Mooshammer, C. (2006). Gestural overlap and C-center in selected French consonant clusters. In Proceedings of the 7th Speech Production Seminar (p. 327). Ubatuba, Brazil.

Marin, S., & Pouplier, M. (2014). Articulatory synergies in the temporal organization of liquid clusters in Romanian. Journal of Phonetics, 42, 24–36.

McGowan, R. S., & Saltzman, E. (1995). Incorporating aerodynamic and laryngeal components into task dynamics. Journal of Phonetics, 23, 255–269.

Namasivayam, A. K., Coleman, D., O’Dwyer, A., & Van Lieshout, P. (2020). Speech sound disorders in children: An articulatory phonology perspective. Frontiers in Psychology, 10, 2998.

Son, M., Kochetov, A., & Pouplier, M. (2007). The role of gestural overlap in perceptual place assimilation in Korean. In J. Cole & J. I. Hualde (Eds.), Papers in laboratory phonology (Vol. 9, pp. 504–534). Berlin: Mouton de Gruyter.

Yanagawa, M. (2006). Articulatory timing in first and second language: A cross-linguistic study (PhD Thesis). Yale University, New Haven, CT.

Zsiga, E. C. (2000). Phonetic alignment constraints: Consonant overlap and palatalization in English and Russian. Journal of Phonetics, 28(1), 69–102.

Zsiga, E. C. (2003). Articulatory timing in a second language: Evidence from Russian and English. Studies in Second Language Acquisition, 25(3), 399–432.