The first "phonetic" studies describing speech sounds date back two thousand years: in the Prātiśākhyas, the sounds of the Vedic hymns (sacred songs) are meticulously described. It was important to pronounce the sacred texts correctly, since their recitation was believed to have miraculous effects that a single mispronunciation of these precious words would destroy (Baudry, 1864).

In France, as in other countries, the study of pronunciation was long tied to grammar. In 1659, Father Laurent Chiflet, in the second part of his Essay d'une parfaite grammaire de la langue françoise, pioneered the description of "good" French pronunciation (Chiflet, 1659). By giving guidance on the pronunciation of vowels and consonants, this work introduced the first terms for describing sound production, such as long vowels, open vowels and diphthongs.

The term "phonetics" most likely first appears in the work of the Danish Egyptologist Georg Zoega (1797), who uses the Latin term phoneticarum (p. 454) to indicate that hieroglyphic characters, although designed to represent things that make no sound, seem closely linked to the spoken language of the people who wrote with them, an idea later confirmed by Champollion (Tillman, 2006).

In the mid-nineteenth century, scientists concerned with the production of speech sounds described vowels and consonants on the basis of the physiological phenomena involved in speech. In 1863, Alexander Melville Bell's Principles of Speech and Dictionary of Sounds used physiology to describe the sounds of English (Bell, 1863). In the same period, physicists became keen to represent sound waves, both to study their nature and to fix them on a physical medium; the first such representations came from tuning forks. The ear phonautograph developed by Alexander Graham Bell and Clarence J. Blake produced the first known representation of a speech wave.

In the same period, other recording instruments appeared, such as Thomas Edison's phonograph, Édouard-Léon Scott de Martinville's phonautograph and Charles Cros's paléophone (Hémardinquer, 1930).

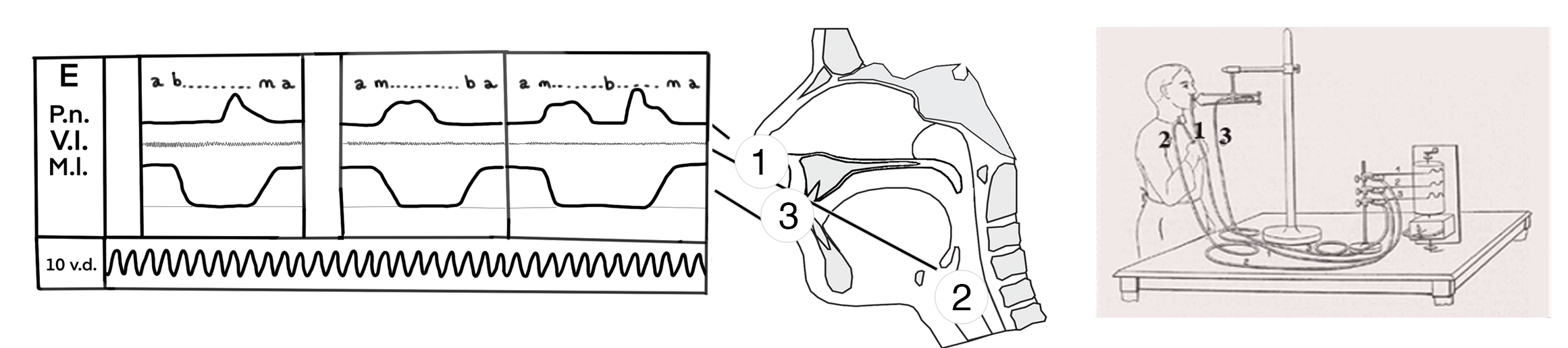

However, it was Étienne-Jules Marey who gave new impetus to the description of speech sounds. He developed a graphic method for recording experimental, physiological and biological phenomena (Marey, 1868). He entrusted the recording of speech sounds to one of his students, Charles Léopold Rosapelly, who was assisted by Louis Havet, then secretary of the Société de Linguistique de Paris. Together, they produced the first instrumental graphic recordings of lip movements and laryngeal vibrations, as well as indirect recordings of soft palate movements (Rosapelly, 1876).

Instrumental phonetics was born. Abbé Pierre-Jean Rousselot followed this work very closely, seeing it as the starting point for a physiological phonetics that, from 1889 onwards, he called "experimental" (Rousselot, 1897). Rousselot believed that the ear alone was not sufficient for an accurate transcription of sounds, and he wanted to turn this new approach into a science in its own right, on a par with physics or biology. The range of disciplines he drew on to study speech sounds explains why he insisted on the name experimental phonetics. He took physics lessons from Édouard Branly and was interested in Rudolph Koenig's instruments; in 1885, he met Charles Verdin, the builder of Marey's instruments, as well as Rosapelly, who introduced him to the principles of recording phonetic phenomena. Rousselot used existing instruments but is also known to have built his own. In 1886, with the help of Jules Deseilligny, he created his first device, which he called the "Inscription électrique de la parole" (electrical inscription of speech) and which became the starting point for Rousselot's kymograph. The kymograph, as conceived by Marey and Rousselot, went through several successive versions and was used in phonetics laboratories until the 1960s (Teston, 2010).

In his 1897 book, Rousselot graphically studied all the organs involved in speech production and also provided a detailed acoustic description. In describing speech sounds, he distinguishes between the graphic method, which focuses on physical and physiological functions, and the acoustic method (Rousselot, 1897).

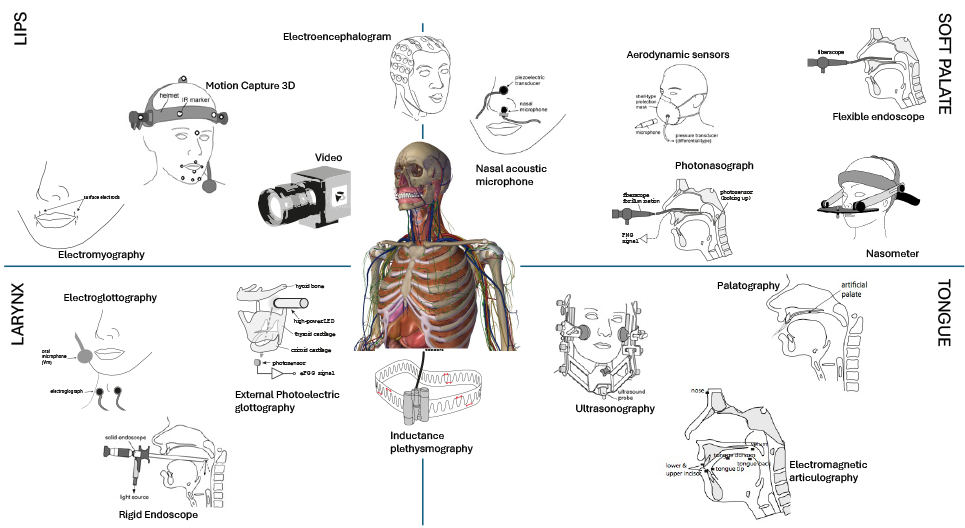

Instrumental phonetics, which Rousselot called experimental phonetics, has made a fundamental contribution to the description of speech sounds and has brought phoneticians closer to the engineering sciences. Its interaction with the medical world has been a major asset: the links forged with clinicians have given phonetics researchers access to instruments that, being invasive, would otherwise not have been available to them. Phonetics laboratories have developed multi-instrument, multi-parameter platforms for exploring speech physiology, which help visualize and measure respiratory function during speech, the dynamics of the laryngeal articulators and the movements of the vocal tract organs involved in speech gestures. Combined with acoustic recordings, instruments for electromyographic, electroglottographic and aerodynamic measurements, as well as for recording the brain's electrical activity, have increased our knowledge of phonetics, improved our understanding of language mechanisms and provided information on compensatory mechanisms.

The table below lists the levels of speech production and the speech organs involved, together with instruments that can be used to study each of them:

| Level | Organ | Instrumental technique | Parameters measured/studied |
|---|---|---|---|
| 1: Breathing | Lungs | Electromyography (EMG) | Muscular activity |
| | | Inductance plethysmography | Pulmonary ventilation |
| 2: Vibration | Larynx | Electroglottography (EGG) | Contact between the two vocal folds |
| | | Photoelectric glottography (PGG) | Degree of glottal opening |
| | | External photoelectric glottography (ePGG) | Degree of glottal opening and of vocal fold opening |
| | | Rigid and flexible endoscopes | Visualization of laryngeal structures |
| 3: Resonators | Tongue | Ultrasonography (USG) | Visualization of the tongue contour |
| | | Static and dynamic palatography | Degree of linguo-palatal contact |
| | | Electromagnetic articulography | 3D marker tracking, tongue movements |
| | Velum | Nasal acoustic microphone and piezoelectric transducer | Nasal acoustic signal, nasalance ratio, intensity of skin-surface vibrations |
| | | Aerodynamic sensors | Nasal and oral airflow, air pressure |
| | | Flexible endoscope | Height of the nasal surface of the velum, lateral and posterior pharyngeal wall movements |
| | | Photonasography (PNG) | Opening of the velopharyngeal port |
| | | Electromagnetic articulography | Height of the velum |
| | Lips and face | Video | Visualization |
| | | Motion capture system | 3D marker tracking |
| | | Electromyography (EMG) | Muscular activity |
| | Brain | Electroencephalography (EEG) | Cerebral activity |
| | All articulators simultaneously | Magnetic resonance imaging (MRI) | Activity of the speech organs |
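The table lists the nasalance ratio among the velum parameters. A common definition, used by nasometer-type devices, divides the nasal acoustic energy by the sum of nasal and oral acoustic energy and multiplies by 100. The minimal sketch below assumes two separated, time-aligned channels (a nasal and an oral microphone, the latter not listed in the table) and uses RMS amplitude as the energy measure; real devices also band-pass filter the signals, which is omitted here. The function names `rms` and `nasalance` are illustrative, not part of any specific tool.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of audio samples."""
    if not samples:
        raise ValueError("empty signal")
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def nasalance(nasal, oral):
    """Nasalance in percent: nasal / (nasal + oral) * 100.

    Assumption: `nasal` and `oral` are time-aligned excerpts from two
    separated microphones, and RMS amplitude stands in for acoustic energy
    (no band-pass filtering is applied here).
    """
    n = rms(nasal)
    o = rms(oral)
    if n + o == 0:
        raise ValueError("both channels are silent")
    return 100.0 * n / (n + o)


# Toy signals: the nasal channel is three times louder than the oral one.
print(nasalance([3, -3, 3, -3], [1, -1, 1, -1]))  # 75.0
```

Values near 0 indicate little nasal energy (e.g., oral vowels), values near 100 indicate that most of the energy leaves through the nose.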

To relate these measurements to speech, the instrument signals are synchronized as closely as possible with the acoustic signal recorded by a microphone.
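One simple way to perform this synchronization, sketched here under stated assumptions, is to record a shared sync pulse (for instance a click) on both the microphone and the instrument channel, locate it in each stream, and then resample the instrument signal onto the audio time grid by linear interpolation. The function names, the threshold-based pulse detection and the constant sample rates (no clock drift) are all hypothetical simplifications, not the procedure of any particular laboratory platform.

```python
import math


def first_crossing(signal, threshold):
    """Index of the first sample whose absolute value reaches `threshold`.

    Assumption: the sync pulse is the first event above threshold,
    i.e. it is recorded before the speaker starts talking.
    """
    for i, s in enumerate(signal):
        if abs(s) >= threshold:
            return i
    raise ValueError("sync pulse not found")


def align_to_audio(instrument, inst_rate, audio, audio_rate, threshold):
    """Resample an instrument channel onto the audio sample grid.

    Both recordings are assumed to contain the same sync pulse and to run
    at constant sample rates (Hz). Returns a list as long as `audio`;
    audio samples outside the instrument's time span map to None.
    """
    t_audio0 = first_crossing(audio, threshold) / audio_rate
    t_inst0 = first_crossing(instrument, threshold) / inst_rate
    offset = t_inst0 - t_audio0  # instrument time = audio time + offset
    aligned = []
    for k in range(len(audio)):
        t = k / audio_rate + offset  # time on the instrument's clock
        x = t * inst_rate            # fractional instrument sample index
        i = math.floor(x)
        if i < 0 or i + 1 >= len(instrument):
            aligned.append(None)
            continue
        frac = x - i
        # Linear interpolation between neighbouring instrument samples.
        aligned.append(instrument[i] * (1 - frac) + instrument[i + 1] * frac)
    return aligned


# Toy example: instrument at 2 Hz, audio at 4 Hz, pulse at t = 0.5 s in both.
print(align_to_audio([0, 5, 0, 0], 2, [0, 0, 5, 0, 0, 0, 0, 0], 4, 1.0))
# [0.0, 2.5, 5.0, 2.5, 0.0, 0.0, None, None]
```

Linear interpolation keeps the sketch short; with real recordings, a low-pass filter before resampling and a check for clock drift between devices would usually be needed.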

Baudry, F. (1864), De la science du langage et de son état actuel, Extrait de la Revue archéologique, Paris.

Bell, A.M. (1863), Principles of speech and dictionary of sounds, Washington, D.C.: Volta Bureau.

Chiflet, L. (1659), Essay d'une parfaite grammaire de la langue françoise, Cologne: Pierre Le Grand.

Hémardinquer, P. (1930), Le phonographe et ses merveilleux progrès, Masson et Cie.

Marey, É.-J. (1868), Du mouvement dans les fonctions de la vie. Leçons faites au Collège de France, Paris: G. Baillière.

Rosapelly, C.L. (1876), Inscriptions des mouvements phonétiques, in Physiologie expérimentale, Tome 2, Paris: Paul Dupont.

Rousselot, P.J. (1897), Principes de phonétique expérimentale, Paris & Leipzig: Welter éditeur.

Teston, B. (2010), Une petite histoire de l'analyse harmonique de la parole, XXVIIIes Journées d'Étude sur la Parole, Mons.

Tillman, H.G. (2006), Experimental and instrumental phonetics: History, in Encyclopedia of Language & Linguistics, 2nd edition.

Vaissière, J., Honda, K., Amelot, A., Maeda, S., Crevier-Buchman, L. (2010), Multisensor platform for speech physiology research in a phonetics laboratory, The Journal of the Phonetic Society of Japan, 14 (2), pp. 65-78.

Zoega, G. (1797), De origine et usu obeliscorum ad Pium Sextum, Romae: Typis Lazzarinii.